Linear Regression

Question

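Using the provided data (house size, number of bedrooms, sale price), fit a basic multivariate linear regression model so that the sale price of a house can be predicted from its known size and number of bedrooms.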

Theory

A multivariate linear function can be written as:

$$
f(x_{1},x_{2},x_{3},\dots,x_{n})=\theta_{0}+\theta_{1}x_{1}+\dots+\theta_{n}x_{n}
$$

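Vectorizing the parameters and the variables gives the following (a constant 1 is placed first in X so that theta0 also has a term to multiply):

$$
\Theta=\begin{bmatrix}\theta_{0}\\ \vdots\\ \theta_{n}\end{bmatrix},\quad
X=\begin{bmatrix}1\\ x_{1}\\ \vdots\\ x_{n}\end{bmatrix}
$$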

Then

$$
f(x_{1},x_{2},x_{3},\dots,x_{n})=X^{T}\Theta
$$
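As a quick numerical check of the vectorized form, the sketch below uses made-up values for theta and x (purely illustrative) and confirms that the explicit sum and X^TΘ agree. When the m training examples are stacked as the rows of an m×(n+1) matrix, the same product becomes `X @ theta` and predicts all examples at once.

```python
import numpy as np

# Hypothetical parameters theta_0..theta_2 and one example with features x_1, x_2
theta = np.array([1.0, 2.0, -0.5])
x = np.array([3.0, 4.0])

# Explicit form: theta_0 + theta_1*x_1 + theta_2*x_2
explicit = theta[0] + theta[1] * x[0] + theta[2] * x[1]

# Vectorized form: prepend the constant 1 so theta_0 has a "feature" to multiply
X_vec = np.concatenate(([1.0], x))
vectorized = X_vec @ theta  # X^T Theta for a single column vector X

# With examples stacked as rows (m x (n+1)), X @ theta predicts all of them at once
X_rows = np.array([[1.0, 3.0, 4.0],
                   [1.0, 0.0, 2.0]])
batch = X_rows @ theta
print(explicit, vectorized, batch)
```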

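Constructing the cost function J(Θ):

Let m be the number of training examples; the superscript i denotes the i-th example (an index, not a power).

$$
J(\Theta)=\frac{1}{2m}\sum_{i=1}^{m}\left((X^{i})^{T}\Theta-y^{i}\right)^{2}
$$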
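A minimal vectorized sketch of this cost in NumPy, assuming X is the m×(n+1) matrix of stacked examples (first column all ones), y an m×1 column and theta an (n+1)×1 column. It reuses the name and argument order of the `costFunction(X,y,theta)` that appears later in this post, but the body is an assumption rather than the author's code, and the three-point dataset is purely illustrative.

```python
import numpy as np

def costFunction(X, y, theta):
    """J(Theta) = 1/(2m) * sum_i ((X^i)^T Theta - y^i)^2.

    X: (m, n+1) design matrix whose first column is all ones
    y: (m, 1) targets; theta: (n+1, 1) parameters
    """
    m = X.shape[0]
    residuals = X @ theta - y          # (m, 1) prediction errors
    return float(np.sum(residuals ** 2) / (2 * m))

# Tiny illustrative dataset: y = 1 + 2*x exactly, so theta = [1, 2] has zero cost
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([[1.0], [3.0], [5.0]])
print(costFunction(X, y, np.array([[1.0], [2.0]])))   # perfect fit
print(costFunction(X, y, np.zeros((2, 1))))           # theta = 0
```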

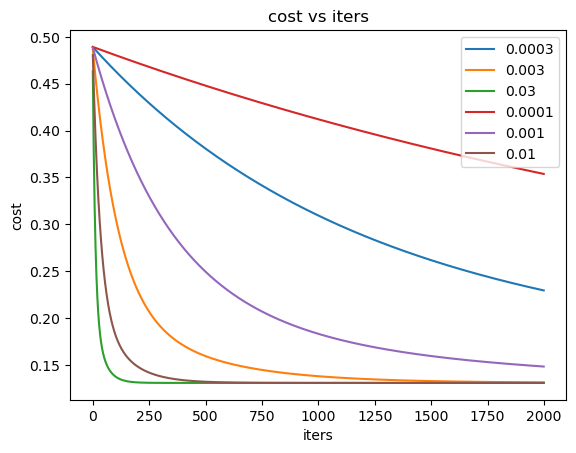

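Next comes gradient descent:

Take the partial derivative of the cost function with respect to each parameter theta, and introduce a learning rate alpha, which determines how far each step moves along the gradient. Note that theta0 is not multiplied by any variable x in the equation; it is a "constant term", so its update formula looks slightly different from those of the other theta (it is the general formula with the constant 1 in place of x).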

$$
\begin{aligned}
\theta_{0}&=\theta_{0}-\frac{\alpha}{m}\sum_{i=1}^{m}\left((X^{i})^{T}\Theta-y^{i}\right)\\
&\vdots\\
\theta_{n}&=\theta_{n}-\frac{\alpha}{m}\sum_{i=1}^{m}\left((X^{i})^{T}\Theta-y^{i}\right)x_{n}^{i}
\end{aligned}
$$
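The per-parameter rules above can be collapsed into one matrix expression, Θ := Θ − (α/m)·Xᵀ(XΘ − y), where X stacks the examples as rows. The sketch below (random illustrative data, hypothetical helper names) writes one update both ways and checks that they match; the loop version computes every theta_j from the old Θ, i.e. a simultaneous update.

```python
import numpy as np

def gd_step_loop(X, y, theta, alpha):
    """One update of every theta_j, written per parameter as in the equations.

    X has a leading column of ones, so the theta_0 rule is the general rule with x_0 = 1.
    All theta_j are computed from the old theta (simultaneous update).
    """
    m, n_plus_1 = X.shape
    errors = X @ theta - y            # (m,) errors using the old theta
    new_theta = theta.copy()
    for j in range(n_plus_1):
        new_theta[j] = theta[j] - alpha / m * np.sum(errors * X[:, j])
    return new_theta

def gd_step_vectorized(X, y, theta, alpha):
    """Same update in matrix form: theta := theta - (alpha/m) * X^T (X theta - y)."""
    m = X.shape[0]
    return theta - alpha / m * X.T @ (X @ theta - y)

rng = np.random.default_rng(0)
X = np.column_stack([np.ones(5), rng.normal(size=(5, 2))])
y = rng.normal(size=5)
theta = np.zeros(3)
print(np.allclose(gd_step_loop(X, y, theta, 0.1), gd_step_vectorized(X, y, theta, 0.1)))
```

The matrix form avoids the Python loop over parameters, which matters once n grows.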

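Run gradient descent for a suitable number of iterations (updating all theta simultaneously in each iteration), then take the trained theta to obtain the prediction function:

$$
f_{\text{final}}(x_{1},x_{2},x_{3},\dots,x_{n})=X^{T}\Theta_{\text{final}}
$$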

Data Loading and Preprocessing

`import numpy as np`

| | size | bedrooms | price |
|---|---|---|---|
| 0 | 2104 | 3 | 399900 |
| 1 | 1600 | 3 | 329900 |
| 2 | 2400 | 3 | 369000 |
| 3 | 1416 | 2 | 232000 |
| 4 | 3000 | 4 | 539900 |
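A minimal loading sketch with pandas. The data file's name and path are not given here, so the sketch feeds the five rows shown above through `io.StringIO`; it assumes (unconfirmed) that the real file is headerless, comma-separated text with one size,bedrooms,price record per line, in which case the file path would replace `raw`.

```python
import io
import pandas as pd

# The five rows from the table above, as headerless comma-separated text.
# Assumption: the full 47-row data file uses this same layout.
raw = io.StringIO(
    "2104,3,399900\n"
    "1600,3,329900\n"
    "2400,3,369000\n"
    "1416,2,232000\n"
    "3000,4,539900\n"
)
data = pd.read_csv(raw, header=None, names=["size", "bedrooms", "price"])
print(data.head())
```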

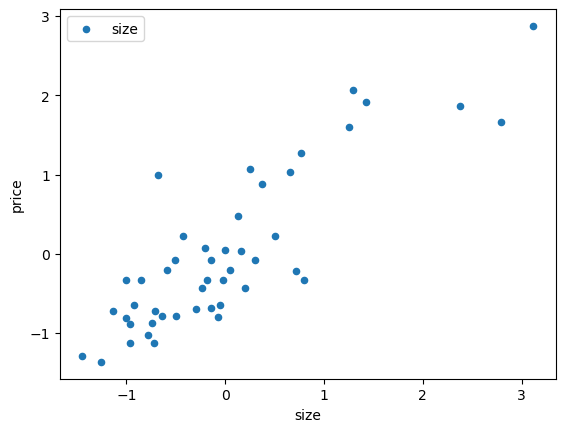

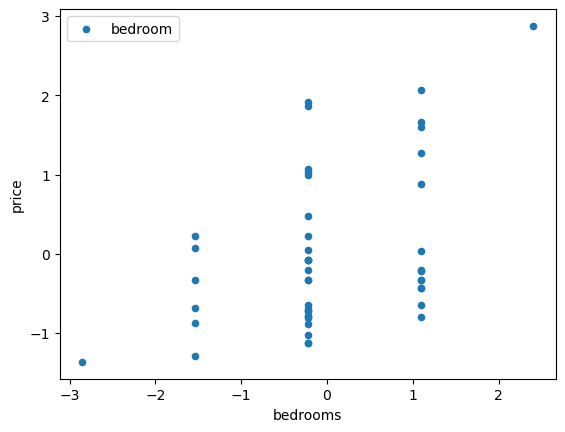

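Normalization

House size, number of bedrooms and price differ greatly in magnitude (sizes in the thousands, 2 to 4 bedrooms, prices in the hundreds of thousands in the rows above), so the corresponding linear parameters would also differ greatly in size. Normalization rescales all of them to the same order of magnitude for the computation, which also lets a single learning rate alpha work for every parameter; the results only need to be converted back at the end.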

`def normalize_feature(data):`

| | size | bedrooms | price |
|---|---|---|---|
| 0 | 0.130010 | -0.223675 | 0.475747 |
| 1 | -0.504190 | -0.223675 | -0.084074 |
| 2 | 0.502476 | -0.223675 | 0.228626 |
| 3 | -0.735723 | -1.537767 | -0.867025 |
| 4 | 1.257476 | 1.090417 | 1.595389 |
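Only the first line of `normalize_feature` survives here. The normalized table (values centred near zero) is consistent with z-score scaling, (value − column mean) / column standard deviation, so the sketch below assumes that, using pandas' default sample standard deviation (ddof=1). It is applied to the five raw rows only, so its numbers differ from the 47-row table above.

```python
import pandas as pd

def normalize_feature(data):
    """Z-score each column: (value - column mean) / column standard deviation.

    Assumption: this matches the author's function; pandas' DataFrame.std()
    defaults to the sample standard deviation (ddof=1).
    """
    return (data - data.mean()) / data.std()

# Illustration on the five raw rows from the first table (not the full data set)
data = pd.DataFrame({"size": [2104, 1600, 2400, 1416, 3000],
                     "bedrooms": [3, 3, 3, 2, 4],
                     "price": [399900, 329900, 369000, 232000, 539900]})
norm = normalize_feature(data)
print(norm.round(6))
```

Keep `data.mean()` and `data.std()` of the raw data: they are exactly what is needed to convert predictions back at the end.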

`data.plot.scatter('size','price',label='size')`

`data.plot.scatter('bedrooms','price',label='bedroom')`

`data.insert(0,'ones',1)`

| | ones | size | bedrooms | price |
|---|---|---|---|---|
| 0 | 1 | 0.130010 | -0.223675 | 0.475747 |
| 1 | 1 | -0.504190 | -0.223675 | -0.084074 |
| 2 | 1 | 0.502476 | -0.223675 | 0.228626 |
| 3 | 1 | -0.735723 | -1.537767 | -0.867025 |
| 4 | 1 | 1.257476 | 1.090417 | 1.595389 |

`X=data.iloc[:,0:-1]`

| | ones | size | bedrooms |
|---|---|---|---|
| 0 | 1 | 0.130010 | -0.223675 |
| 1 | 1 | -0.504190 | -0.223675 |
| 2 | 1 | 0.502476 | -0.223675 |
| 3 | 1 | -0.735723 | -1.537767 |
| 4 | 1 | 1.257476 | 1.090417 |

`y.head()`

0 0.475747

1 -0.084074

2 0.228626

3 -0.867025

4 1.595389

Name: price, dtype: float64

`X=X.values`

(47, 3)

`y=y.values`

(47, 1)
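The preparation steps above can be condensed into one sketch, run here on three rows copied from the normalized tables (rows 0, 1 and 4) rather than the full data. `Series.values` on its own returns a 1-D array, so the (47, 1) shape printed above implies y was turned into a column vector; `reshape(-1, 1)` is used here as one way to do that (an assumption, since that step is not shown). The zero initial theta is likewise an assumption.

```python
import numpy as np
import pandas as pd

# Three normalized rows copied from the tables above (rows 0, 1 and 4)
data = pd.DataFrame({"size": [0.130010, -0.504190, 1.257476],
                     "bedrooms": [-0.223675, -0.223675, 1.090417],
                     "price": [0.475747, -0.084074, 1.595389]})

data.insert(0, "ones", 1)                    # column of ones multiplies theta_0
X = data.iloc[:, 0:-1].values                # every column except price: (m, 3)
y = data.iloc[:, -1].values.reshape(-1, 1)   # price as an (m, 1) column vector
theta = np.zeros((X.shape[1], 1))            # (3, 1) initial parameters
print(X.shape, y.shape, theta.shape)
```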

Building the Cost Function

`def costFunction(X,y,theta):`

0.48936170212765967
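The printed value can be checked by hand, assuming (the call itself is not shown) that the cost was evaluated at the initial Θ = 0 on the normalized data with m = 47. Every prediction is then 0, and because price was z-scored with the sample standard deviation, its values have mean 0 and sample variance 1, so the sum of their squares is m − 1 = 46:

$$
J(\mathbf{0})=\frac{1}{2m}\sum_{i=1}^{m}\left(y^{i}\right)^{2}=\frac{m-1}{2m}=\frac{46}{94}\approx 0.4893617
$$

With the population standard deviation the same calculation would give exactly 0.5, so the printed value indicates that the sample standard deviation was used.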

Building Gradient Descent

`def gradientDescent(X,y,theta,alpha,iters):`

`fig,ax=plt.subplots()`

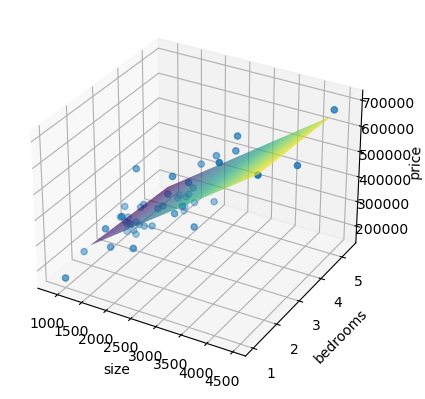
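A minimal batch gradient descent sketch that keeps the signature `gradientDescent(X,y,theta,alpha,iters)` shown above and records the cost after every iteration. The body, the returned `(theta, costs)` pair and the synthetic noise-free data are assumptions for illustration; the author's alpha and iteration count are not shown here.

```python
import numpy as np

def costFunction(X, y, theta):
    # J(Theta) = 1/(2m) * sum of squared errors
    m = X.shape[0]
    return float(np.sum((X @ theta - y) ** 2) / (2 * m))

def gradientDescent(X, y, theta, alpha, iters):
    """Batch gradient descent; returns the final theta and the cost after each iteration."""
    m = X.shape[0]
    costs = []
    for _ in range(iters):
        # simultaneous update of all theta_j: theta := theta - (alpha/m) * X^T (X theta - y)
        theta = theta - (alpha / m) * (X.T @ (X @ theta - y))
        costs.append(costFunction(X, y, theta))
    return theta, costs

# Synthetic check (not the housing data): y = 2 + 3*x1 - 1*x2 with no noise
rng = np.random.default_rng(1)
features = rng.normal(size=(50, 2))
X = np.column_stack([np.ones(50), features])
y = (2 + 3 * features[:, 0] - features[:, 1]).reshape(-1, 1)
theta, costs = gradientDescent(X, y, np.zeros((3, 1)), alpha=0.1, iters=2000)
print(theta.ravel(), costs[-1])
```

The `costs` list is what one would draw on the `fig,ax=plt.subplots()` axes, for example with `ax.plot(np.arange(iters), costs)`; a curve that flattens out suggests the chosen number of iterations is sufficient.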

Undoing the Normalization and Plotting the Results

`x=np.linspace(y.min(),y.max(),100)`
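Converting back to original units reverses the z-score: inputs are scaled with the training means and standard deviations, f_final = XᵀΘ gives a normalized price, and multiplying by the price standard deviation and adding the price mean restores dollars. The sketch below assumes z-score normalization with pandas' sample standard deviation; `predict_price` is a hypothetical helper, the statistics come from the five raw rows only, and the theta values are placeholders, not the trained result.

```python
import numpy as np
import pandas as pd

# Raw data: the five rows from the first table (the real fit uses all 47 rows)
raw = pd.DataFrame({"size": [2104, 1600, 2400, 1416, 3000],
                    "bedrooms": [3, 3, 3, 2, 4],
                    "price": [399900, 329900, 369000, 232000, 539900]})
mean, std = raw.mean(), raw.std()   # statistics needed to undo the scaling

def predict_price(size, bedrooms, theta):
    """Predict a price in original units from a theta trained on z-scored data."""
    # 1) scale the inputs exactly as the training features were scaled
    x = np.array([1.0,
                  (size - mean["size"]) / std["size"],
                  (bedrooms - mean["bedrooms"]) / std["bedrooms"]])
    # 2) f_final = X^T Theta gives a normalized price
    z = x @ np.ravel(theta)
    # 3) undo the normalization of the target
    return z * std["price"] + mean["price"]

theta = np.array([0.0, 0.88, -0.05])  # hypothetical values, not the trained result
print(predict_price(1650, 3, theta))
```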

Site

Code (Jupyter notebook) and data: https://github.com/codeYu233/Study/tree/main/Linear%20Regression

Note

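This problem and the dataset both come from the exercises of Andrew Ng's Stanford Machine Learning course on Coursera. They are shared for learning and discussion only; if anything here is inappropriate, it will be removed immediately.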